Activity 2 - Exascale Workflow Scalability

Earth system modeling is becoming increasingly data-limited, in terms of both data volume and throughput. As part of a common vision for exascale Earth system modeling in the Helmholtz Association, the activity group Exascale Workflow Scalability rethinks traditional ways of storing, analyzing, and sharing data to enable scalable and performance-portable solutions for big data workflows on modern supercomputing architectures. The group will further explore novel object storage concepts that allow fast data access, cloud solutions for data services, and data mining and ML methods that automatically annotate and enrich data with descriptive metadata.

The activity group pursues the following goals with respect to extreme data processing: (i) promotion of data-intensive sciences: Simulation and Data Labs (SDL) enable the integration of HPC and data science into data-intensive computing; (ii) interdisciplinary partnerships: we strive to achieve a new level of quality in data analysis and data integration, flexible and sustainable for use on next-generation HPC systems; and (iii) generation of knowledge from data and models: the activity group supports research data management in federated data infrastructures, in line with open science and FAIR data principles. Crucial data and workflow topics were already picked up within the PilotLab ExaESM and will be pursued further in the Joint Lab ExaESM.

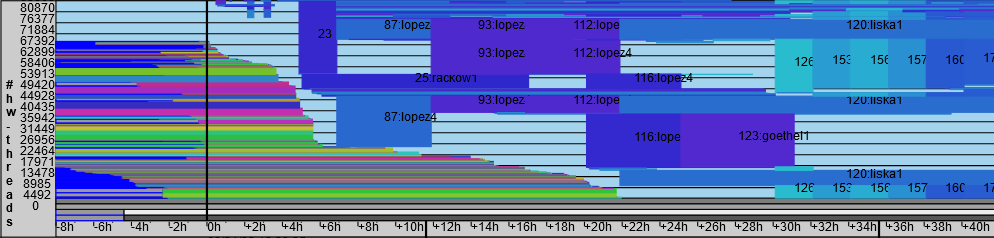

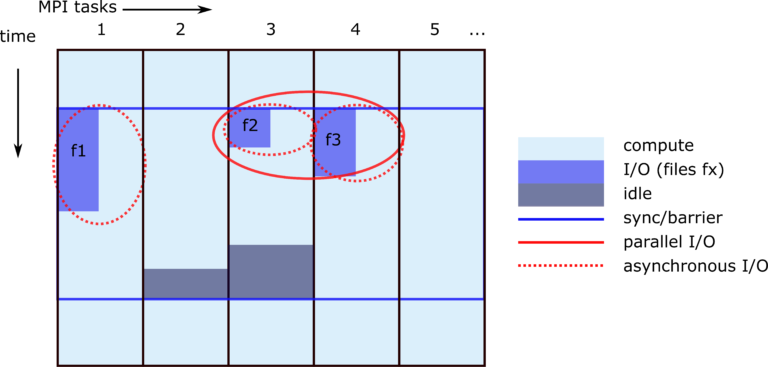

Asynchronous scheduling and exascale workflows

Interactive large-scale analysis workflows

HPC resources are precious and have to be shared among diverse modeling workflows. Job schedulers need to be adapted for efficient processing of hybrid simulations on modular supercomputing architectures. Interactive applications for HPC-related workflows will be supported by developing and sharing expertise on Jupyter notebook and container usage in Earth system modeling.