Topic 3: Hierarchical data architecture co-design for data-intensive simulations

Description

In Topic 3 we analyse the staging behaviour and performance of data-intensive Earth System models (ESMs), together with their specific needs and potential bottlenecks. The focus is on complex ESM applications, such as the simulation of strongly coupled non-linear processes within Earth System compartments, and on data assimilation. The topic thereby provides a technical basis for I/O-related scalability and performance improvements.

Impact of ad-hoc filesystems on ESM performance

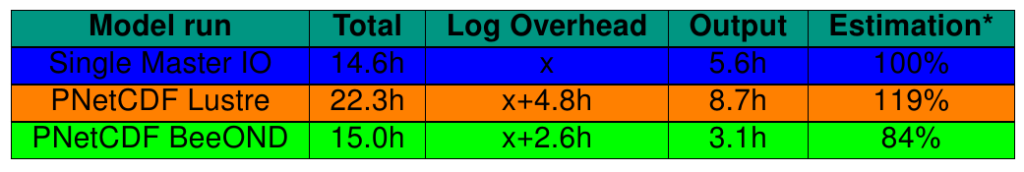

For our analysis we mainly use the ESM simulation code EMAC. So far, the simulations run in parallel (MPI) on 80 nodes of the ForHLR II supercomputer at SCC. Performance analysis with Scalasca / Score-P showed that I/O becomes a bottleneck as the number of nodes increases. A closer look at the I/O statistics collected with Darshan revealed the reason: each node writes only a small chunk of the output (parallel NetCDF). On the standard filesystem (Lustre), this I/O strategy causes considerable overhead. Tests with the same code, but writing the output to the ad-hoc filesystem BeeOND, showed that the simulation runs much faster (see table).

The main reasons are the use of SSDs, which can handle more I/O operations per unit of time, and the exclusive use of the filesystem, so that bandwidth does not have to be shared with other users. An example of the wall time used per time step of the simulation is shown in figure 1.

ICON 2.5 did not show any difference between runs on the Lustre and BeeOND filesystems.
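The cost of writing output in many small chunks can be probed with a simple single-process test. The following is a minimal sketch, not the EMAC output routine: it does not use parallel NetCDF or MPI, and the function name `time_writes` and all sizes are assumptions chosen for illustration. It writes the same number of bytes to a directory either in many small, individually synced chunks or in one large chunk, and reports the elapsed wall time. The system temporary directory is used here as a stand-in; on a real system one would pass a directory on the Lustre mount and one on the BeeOND mount, whose paths are site-specific.

```python
import os
import tempfile
import time


def time_writes(directory, total_bytes, chunk_bytes):
    """Write total_bytes to a temporary file in `directory` in chunks of
    chunk_bytes, syncing after every chunk.

    Returns (elapsed_seconds, bytes_on_disk). Hypothetical helper for
    illustration only; it is not part of EMAC, Darshan or BeeOND.
    """
    payload = b"\0" * chunk_bytes
    n_chunks = total_bytes // chunk_bytes
    fd, path = tempfile.mkstemp(dir=directory)
    try:
        start = time.perf_counter()
        with os.fdopen(fd, "wb") as f:
            for _ in range(n_chunks):
                f.write(payload)
                f.flush()
                os.fsync(f.fileno())  # force each chunk out to storage
        elapsed = time.perf_counter() - start
        size = os.path.getsize(path)
    finally:
        os.remove(path)
    return elapsed, size


if __name__ == "__main__":
    target = tempfile.gettempdir()  # stand-in for a Lustre or BeeOND directory
    total = 256 * 1024              # 256 KiB in total (assumed size)
    small, _ = time_writes(target, total, 4 * 1024)  # 64 small chunks
    large, _ = time_writes(target, total, total)     # one large chunk
    print(f"many small chunks: {small:.4f} s, one large chunk: {large:.4f} s")
```

Running the probe against two different directories gives a rough first impression of how each filesystem handles many small synchronous writes. It does not replace a Darshan profile of the actual application.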

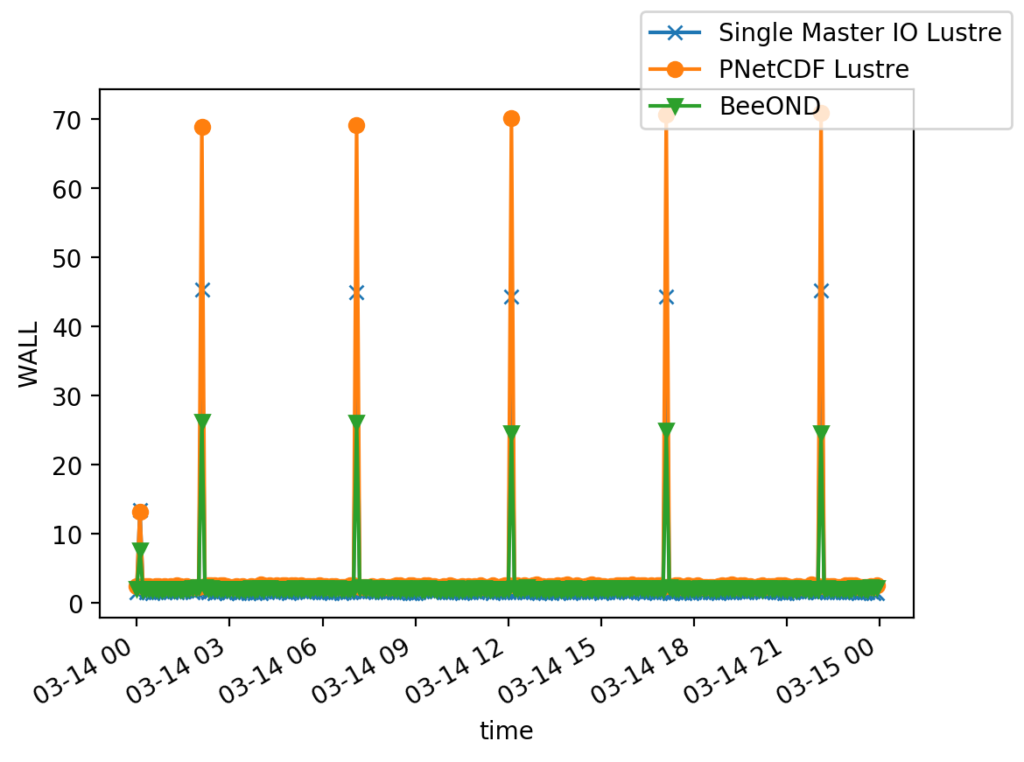

Performance increase by compression of output

Visualization and I/O

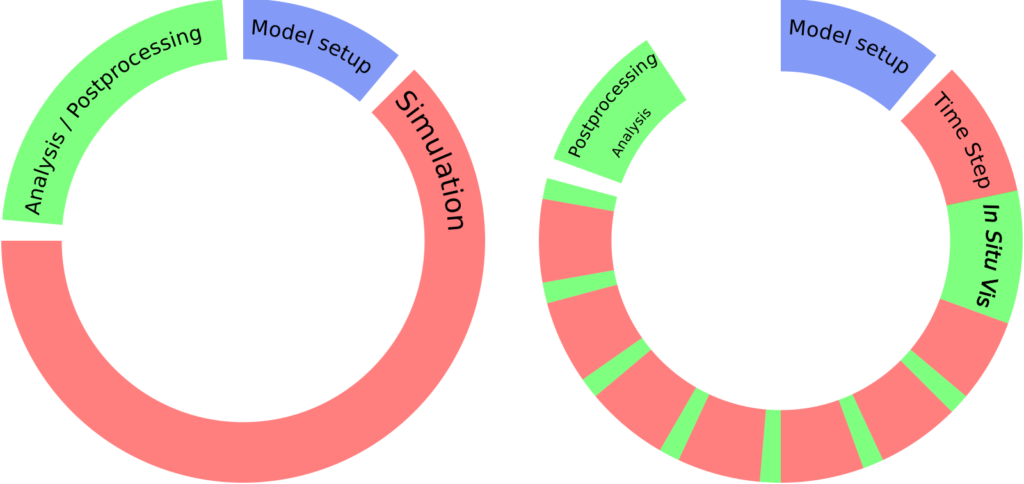

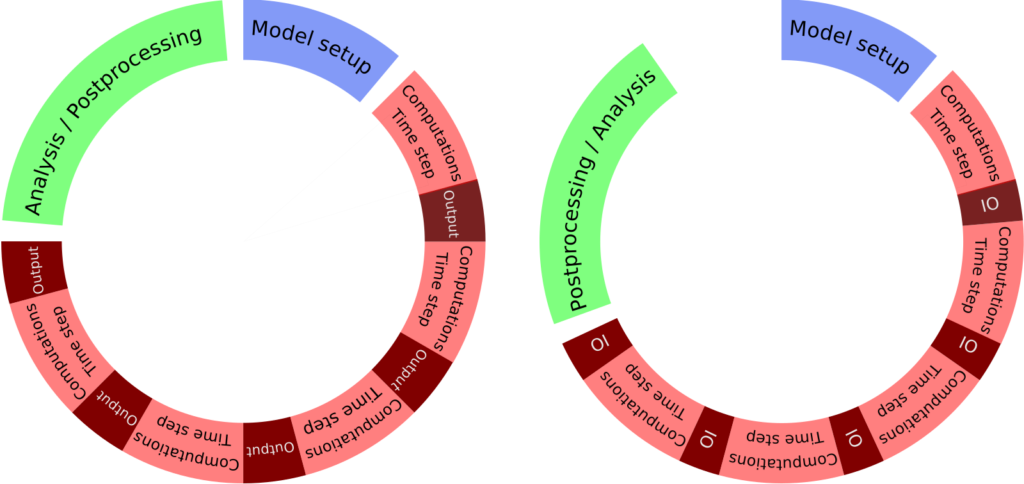

Typically, modeling proceeds in three phases: model creation, model simulation and model evaluation, as shown on the left side of figure 3. Often, the knowledge gained from the evaluation is incorporated into an improved model; this is referred to as the modeling cycle. The goal within the PL-ExaESM project is to shorten the modeling cycle so that pressing questions can be answered as quickly as possible. To this end, two promising approaches were selected: overlapping the simulation and evaluation phases through In Situ visualization (see Fig. 3) and improving the I/O (see Fig. 4).

To enable In Situ visualization, an interface to ParaView Catalyst was implemented. The specific In Situ visualization can be configured via a Python script. With this approach, the amount of data that needs to be saved for test examples could be reduced by up to 90%. By using In Situ visualization, the time to complete phases two and three (simulation and postprocessing/analysis) of the model development cycle was reduced to 50%.
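The idea behind the In Situ approach can be illustrated with a toy example. The sketch below does not use ParaView Catalyst and is not the interface implemented in the project: `simulate`, `run_post_hoc` and `run_in_situ` are hypothetical names, and the "simulation" is a stand-in that produces a list of numbers per time step. It contrasts storing every full field for later analysis with reducing each field to a few statistics while the simulation is running.

```python
import math


def simulate(n_steps, n_cells):
    """Toy stand-in for a simulation: yields one field per time step."""
    for step in range(n_steps):
        yield [math.sin(0.1 * step + 0.01 * i) for i in range(n_cells)]


def reduce_field(field):
    """Reduce a field to (min, mean, max)."""
    return (min(field), sum(field) / len(field), max(field))


def run_post_hoc(n_steps, n_cells):
    """Store all fields first, analyse afterwards."""
    stored = list(simulate(n_steps, n_cells))
    summary = [reduce_field(f) for f in stored]
    values_stored = sum(len(f) for f in stored)
    return summary, values_stored


def run_in_situ(n_steps, n_cells):
    """Analyse each field as it is produced and keep only the result."""
    summary = [reduce_field(f) for f in simulate(n_steps, n_cells)]
    values_stored = 3 * len(summary)  # three numbers per time step
    return summary, values_stored


if __name__ == "__main__":
    post, n_post = run_post_hoc(n_steps=5, n_cells=100)
    situ, n_situ = run_in_situ(n_steps=5, n_cells=100)
    print(f"same analysis result: {post == situ}")
    print(f"values stored: post hoc {n_post}, in situ {n_situ}")
```

Both variants produce the same analysis result, but the in situ variant never holds or stores more than one full field at a time. The actual saving in a real model depends on which visualization products are configured, so the numbers of this toy example carry no meaning beyond the illustration.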

In preparation for exascale readiness, the strong scalability of OpenGeoSys was studied. Instrumentation of OpenGeoSys with Darshan revealed a significant bottleneck in the file I/O. By using the Hierarchical Data Format (HDF5) with the underlying Message Passing Interface (MPI) I/O layer, the performance of the file I/O, and subsequently the overall scalability characteristics of OpenGeoSys, were improved. Further possibilities for a more efficient use of computational resources will be studied.
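Strong scalability is usually quantified by speedup and parallel efficiency for a fixed problem size. The following minimal sketch shows the arithmetic; the function name `strong_scaling` is an assumption, and the timings in the usage example are made-up placeholder values, not OpenGeoSys measurements.

```python
def strong_scaling(timings):
    """Compute speedup and parallel efficiency for a fixed problem size.

    timings: dict mapping process count -> wall time in seconds.
    The run with the smallest process count serves as the baseline.
    Returns a list of (processes, speedup, efficiency) tuples.
    """
    p0 = min(timings)
    t0 = timings[p0]
    rows = []
    for p in sorted(timings):
        speedup = t0 / timings[p]        # how much faster than the baseline
        efficiency = speedup * p0 / p    # 1.0 means ideal scaling
        rows.append((p, speedup, efficiency))
    return rows


if __name__ == "__main__":
    # Placeholder wall times in seconds, for illustration only.
    example = {1: 100.0, 2: 50.0, 4: 40.0}
    for p, s, e in strong_scaling(example):
        print(f"{p:>3} processes: speedup {s:.2f}, efficiency {e:.3f}")
```

An efficiency that drops as processes are added, as in the last row of the placeholder data, is the typical signature of a serial or poorly scaling component such as file I/O.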

ESMs considered in this topic

The ECHAM/MESSy Atmospheric Chemistry (EMAC) model is a numerical chemistry and climate simulation system that includes sub-models describing tropospheric and middle atmosphere processes and their interaction with oceans, land and human influences (Jöckel et al., 2010). It uses the second version of the Modular Earth Submodel System (MESSy2) to link multi-institutional computer codes. The core atmospheric model is the 5th generation European Centre Hamburg general circulation model (ECHAM5, Roeckner et al., 2006).

ICON is the icosahedral nonhydrostatic weather and climate model developed by the German Weather Service and the Max Planck Institute for Meteorology, with contributions from additional institutions. In our tests we used the benchmark version, which is used, for example, to benchmark new HPC systems at KIT-SCC.

The open-source software OpenGeoSys is designed to simulate coupled thermal, hydraulic, mechanical and bio-chemical (THM/BC) processes in fractured porous media.